The state of tracking using Augmented Reality in iOS

Note: This article was written in April 2022.


Augmented Reality (AR) brings together a number of different technical disciplines, and it keeps getting more interesting as those disciplines, and AR as a whole, advance. With this in mind, AR is no longer a gimmick but a viable way to add value to existing products or even to build completely new ones.

Because AR is a new technology that requires a range of skill sets, developing with it adds complexity, and with complexity comes time and cost. That cost might take several forms:
- longer timelines, as developers and designers build UI that needs reworking after testing;
- edge cases that weren't previously considered;
- branching logic or a much smaller user base than a standard app, because not all devices have the same AR capabilities.

Some easy wins for iOS apps would be anything that builds on existing session configurations and established AR practices. Working with Apple's frameworks is straightforward for current iOS developers, because the same standards and expectations apply. We should expect this space to change quickly, though, as many leading tech companies and innovative startups are betting big on AR's potential. These players are already solving many of the technology's major problems, whether through mainstream hardware or by building more usable design paradigms based on their findings.

Building custom tracking, rendering, or similar features outside the design and technology scope that Apple's frameworks already cover would likely open the floodgates to complexity. It could also lead to a final product that can't live up to mainstream usability and technology expectations.

This should not discourage keen innovators but inspire them to build experiences that add unique value for their products and users. As the space continues to grow, investing in it now could help products stand out in saturated markets and let new industry leaders take charge.

What is Augmented Reality?

Augmented reality (AR) is an interactive experience of a real-world environment where the objects that reside in the real world are enhanced by computer-generated perceptual information, sometimes across multiple sensory modalities…

Why not Virtual Reality?

In virtual reality (VR), the user's perception of reality is based entirely on virtual information. In augmented reality (AR), the user is given additional computer-generated information within data collected from real life, which enhances their perception of reality. For example, in architecture, VR can be used to create a walk-through simulation of the inside of a new building. AR, by contrast, can show a building's structures and systems superimposed on a real-life view.

AR allows us to augment existing real-world experiences with additional details. Examples range from trying on clothes at home or speeding up grocery shopping to working on a car. The extra detail might be where to find the pizza at the supermarket, what size screwdriver you need for the screw you are looking at, or simply a funny face filter or makeup to try on.

What is already out there?

While AR technology is quite well established, it is still not commonplace. Mission to Mars AR is an example of an app that does not need to be in AR, but uses augmentation to improve the experience and make it much more memorable. The same is true of most other AR apps and games, where AR is not essential to the functionality.

Apple often uses IKEA Place as an example of what a great AR experience looks like. This allows users to see how IKEA furniture would look in their house.

Andrew Hart’s shopping sample is an app where AR is essential to the experience and is used creatively. In production, it would likely combine a number of data sources, such as beacons and computer vision, to work out where in the store the user is and what they are looking at. This kind of app can simplify existing experiences, which would likely have a direct impact on a store's sales.

This leads us to a common app design problem: if you want someone to use your app regularly, you probably have to encourage them. Who wants to have to worry about opening an app, not to mention running around holding the phone up? Pokémon GO managed to avoid this by making holding the phone out and interacting a core part of the enjoyment. But how can you prompt someone to take out their phone to interact with your more involved AR experience?

Apple recommends App Clips. These let people scan a small QR code or tap an NFC-embedded sticker to open a lightweight version of the app, so they can quickly pick up the experience wherever they are. Another option might be a notification when you enter a location that your experience is relevant to, such as a store or restaurant.

How do we make AR apps on iOS?

The choice between native and cross-platform applications is never an easy one, and there are many considerations to weigh. For an AR product, notable considerations would be:
- performance, for both a smooth experience and battery life;
- binary size, so users can keep your app installed without storage bloat;
- maturity of the technology;
- interaction with device capabilities.

All of these lean towards a native solution.

What native solutions exist?

Apple offers ARKit, a framework with a high-level API for integrating AR content into your apps. Building on ARKit, Apple created RealityKit, a higher-level 3D graphics and physics engine that includes an entity component system for creating AR experiences.

RealityKit can import standard 3D files and collections (like Pixar's USDZ) directly into the app for rendering. It can also import `.reality` files containing artist-authored content created in Reality Composer, a no-code AR content creation tool where you can do anything from scene layout to basic object-level animation.
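To make the import step concrete, here is a minimal RealityKit sketch. It assumes a USDZ file named `toy_robot.usdz` (a hypothetical asset) is bundled with the app and that an `ARView` is already on screen. The function name is also hypothetical. It loads the model and lets RealityKit attach it to a suitable horizontal plane once one is detected. The code is wrapped in `#if os(iOS)` because plane anchoring only makes sense on an AR-capable iOS/iPadOS device.

```swift
#if os(iOS)
import RealityKit

/// Loads a bundled USDZ model and anchors it to a horizontal surface.
/// "toy_robot" is a hypothetical asset name used for illustration.
func placeModel(in arView: ARView) throws {
    // Loads toy_robot.usdz from the main bundle; throws if the file is missing.
    let model = try ModelEntity.loadModel(named: "toy_robot")
    // RealityKit attaches this anchor to a detected horizontal plane
    // that is at least 20 cm x 20 cm.
    let anchor = AnchorEntity(plane: .horizontal, minimumBounds: [0.2, 0.2])
    anchor.addChild(model)
    arView.scene.addAnchor(anchor)
}
#endif
```

For large models, `ModelEntity.loadModelAsync(named:)` avoids blocking the main thread while the file loads.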

Device limitations

ARKit requires iOS 11.0 or later and an iOS device with an A9 processor or later. However, most of the meaningful features require or recommend an A12 processor or later running iOS 15. This helps apps meet Apple's quality and performance standards and make use of hardware-accelerated graphics and neural network computation.

We need to check that the device has these capabilities, and that we have permission to use them. There are a couple of recommended, user-friendly ways to check:

- If the basic functionality of your app requires AR: add the `arkit` key in the `UIRequiredDeviceCapabilities` section of your app's Info.plist file. Using this key makes your app available only to ARKit-compatible devices.
- If augmented reality is a secondary feature of your app: check whether the current device supports the AR configuration you want using `ARConfiguration.isSupported`.
- If your app uses face-tracking AR: face tracking requires the front-facing TrueDepth camera (iPhone X and later) or, from iOS 14, a device with an A12 Bionic chip or later. Your app remains available on other devices, so you must test the `ARFaceTrackingConfiguration.isSupported` property to determine face-tracking support on the current device. When using face tracking, Apple also requires you to include a privacy policy describing to users how you intend to use face tracking and face data.
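For the "secondary feature" case, a small sketch of the runtime check could look like this. `ARFeatureSupport` is a hypothetical type of our own; only the two `isSupported` class properties come from ARKit. The decision logic lives outside the `#if os(iOS)` guard so it can be tested without a device.

```swift
#if os(iOS)
import ARKit
#endif

/// Hypothetical app-side summary of what the current device can do.
struct ARFeatureSupport {
    var worldTracking: Bool
    var faceTracking: Bool

    /// Show an AR entry point only if at least one experience can run.
    var showsAREntryPoint: Bool { worldTracking || faceTracking }
}

#if os(iOS)
extension ARFeatureSupport {
    /// Queries ARKit for the current device's capabilities.
    static var current: ARFeatureSupport {
        ARFeatureSupport(
            worldTracking: ARWorldTrackingConfiguration.isSupported,
            // Depends on the front camera and chip (see the list above).
            faceTracking: ARFaceTrackingConfiguration.isSupported
        )
    }
}
#endif
```

In the UI layer, `ARFeatureSupport.current.showsAREntryPoint` can then decide whether to show the AR button at all, rather than failing after the user taps it.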

Now how do we make something?

AR experiences in ARKit are built around sessions, which identify anchors. We attach nodes to anchors to render them in our scene, and nodes can also be attached to other nodes for more complex behaviour.

The key types involved are `ARSession`, `ARAnchor` (and subclasses such as `ARPlaneAnchor`), `ARSCNView`, and `SCNNode`. There are a number of session configurations, each serving a specific purpose with its own tracking capabilities and trade-offs. This investigation focused on `ARWorldTrackingConfiguration`.

Placing a 3D object, like a cube, on a table in the virtual world

As always, there are a few ways to do this. Given what we know above, we want to:
1. configure our session to identify a tracking anchor on a table;
2. add a node representing the cube to that anchor;
3. render the scene realistically, drawing the cube with correct perspective and lighting it realistically in 3D space.

How do we configure our session?

This is exactly what the world tracking configuration is for:

```swift
import ARKit
func makeConfiguration() -> ARWorldTrackingConfiguration {
    let configuration = ARWorldTrackingConfiguration()
    configuration.planeDetection = [.horizontal, .vertical] // look for both flat and upright surfaces
    return configuration
}
```

This configuration uses the device's sensors to identify horizontal and vertical planes in the real world. A plane could be anything from a desk or the seat of a chair to a monitor or picture frame. It isn't limited to individual objects either: ARKit might also identify the floor, a wall, or the ceiling.
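For context, here is a minimal sketch of where that configuration is typically run. `ARViewController` is a hypothetical view controller that owns an `ARSCNView`, starts the session when the view appears, and pauses it when the view goes away. It assumes `sceneView` is connected in a storyboard. ARKit-only code is wrapped in `#if os(iOS)`.

```swift
#if os(iOS)
import ARKit
import UIKit

/// Minimal view controller that owns an ARSCNView and runs a world-tracking session.
/// Assumes `sceneView` is connected in a storyboard; layout and error handling are omitted.
final class ARViewController: UIViewController, ARSCNViewDelegate {
    @IBOutlet var sceneView: ARSCNView!

    override func viewDidLoad() {
        super.viewDidLoad()
        // Receive renderer(_:didAdd:for:) callbacks when ARKit adds anchors.
        sceneView.delegate = self
    }

    override func viewWillAppear(_ animated: Bool) {
        super.viewWillAppear(animated)
        let configuration = ARWorldTrackingConfiguration()
        configuration.planeDetection = [.horizontal, .vertical]
        // Starts camera capture, motion tracking and plane detection.
        sceneView.session.run(configuration)
    }

    override func viewWillDisappear(_ animated: Bool) {
        super.viewWillDisappear(animated)
        // Pause tracking while the view is off screen to save battery.
        sceneView.session.pause()
    }
}
#endif
```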

Planes in ARKit represent real surfaces, and ARKit adds each plane it detects to the session as an `ARPlaneAnchor`.

How do we identify a valid tracking anchor?

Now that we have some viable plane anchors for tracking, we want the cube to be on a table. Plane anchors have a classification, so we can check that we have a table surface by inspecting the anchor's `ARPlaneAnchor.Classification`.

Next, we add our cube to the plane. We do this by adding a node to the scene in the `ARSCNViewDelegate` method `renderer(_:didAdd:for:)`.
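The table check can be wrapped in a small helper. This is a sketch with a hypothetical function name. Plane classification needs iOS 12 and supported hardware, so the helper checks `isClassificationSupported` first. It uses pattern matching because the classification enum has a `.none(Status)` case with an associated value.

```swift
#if os(iOS)
import ARKit

/// Returns true when ARKit has classified the plane as a table.
func isTableSurface(_ planeAnchor: ARPlaneAnchor) -> Bool {
    // Classification is unavailable on older devices; treat those planes as "not a table".
    guard ARPlaneAnchor.isClassificationSupported else { return false }
    if case .table = planeAnchor.classification { return true }
    return false
}
#endif
```

In the delegate method, you could add `guard isTableSurface(planeAnchor) else { return }` right after the `ARPlaneAnchor` cast to restrict the cube to tables. ARKit can refine a plane's classification as it learns more about the scene. Planes rejected at first may therefore also need checking in `renderer(_:didUpdate:for:)`.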

```swift
// ARSCNViewDelegate method: called when ARKit adds a node for a new anchor.
func renderer(_ renderer: SCNSceneRenderer,
              didAdd node: SCNNode,
              for anchor: ARAnchor) {
    // Place content only for anchors found by plane detection.
    guard let planeAnchor = anchor as? ARPlaneAnchor else { return }
    // Create the cube for this plane.
    let cube = Cube(anchor: planeAnchor, in: sceneView)
    // Add the cube to the ARKit-managed node so that it follows
    // changes in the plane anchor as plane estimation continues.
    node.addChildNode(cube)
}
```

Here `Cube` is a custom `SCNNode` subclass, and `sceneView` is the `ARSCNView` whose delegate implements this method.

Rendering is handled by SceneKit, but ARKit can make some intelligent decisions about how the model should be lit. SceneKit uses standard real-time rasterisation techniques to draw the model to screen with correct perspective and realistic lighting and shading.
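The article doesn't show the `Cube` class itself. Below is a minimal sketch of what such a subclass might look like, assuming the `init(anchor:in:)` signature used in the delegate method. The sizing rule in `cubeSide` is hypothetical: it caps the cube at 10 cm and keeps it no wider than half the smaller side of the plane, so the cube doesn't overhang small, newly detected planes.

```swift
#if os(iOS)
import ARKit
import SceneKit
import UIKit
#endif

/// Hypothetical sizing rule: at most 10 cm, never more than half the smaller plane side.
func cubeSide(planeWidth: Float, planeDepth: Float) -> Float {
    min(0.1, min(planeWidth, planeDepth) / 2)
}

#if os(iOS)
/// A sketch of the custom `Cube` node. `sceneView` is accepted only to match
/// the call site; this simple version does not need it.
final class Cube: SCNNode {
    init(anchor: ARPlaneAnchor, in sceneView: ARSCNView) {
        super.init()
        // `extent` is the estimated plane size in metres (x = width, z = depth).
        let side = cubeSide(planeWidth: anchor.extent.x, planeDepth: anchor.extent.z)
        let box = SCNBox(width: CGFloat(side), height: CGFloat(side),
                         length: CGFloat(side), chamferRadius: 0)
        // Physically based shading looks more realistic under ARKit's estimated lighting.
        box.firstMaterial?.lightingModel = .physicallyBased
        box.firstMaterial?.diffuse.contents = UIColor.systemBlue
        geometry = box
        // Rest the cube on the plane's centre; coordinates are relative to the anchor's node.
        position = SCNVector3(anchor.center.x, side / 2, anchor.center.z)
    }

    required init?(coder: NSCoder) {
        fatalError("init(coder:) has not been implemented")
    }
}
#endif
```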

Lighting is applied through our `ARSCNView`, which uses ARKit's light estimate to light the SceneKit scene.
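A sketch of the lighting-related switches follows, assuming they are set before calling `session.run(_:)`; the function name is hypothetical. `isLightEstimationEnabled` and `automaticallyUpdatesLighting` are already on by default. They are set explicitly here only to show where the behaviour comes from.

```swift
#if os(iOS)
import ARKit

/// Lighting-related settings for an ARSCNView-based experience.
func configureLighting(for sceneView: ARSCNView,
                       configuration: ARWorldTrackingConfiguration) {
    // ARKit estimates real-world light from each camera frame (default: true).
    configuration.isLightEstimationEnabled = true
    // Build environment maps so reflective materials pick up the surroundings (iOS 12+).
    configuration.environmentTexturing = .automatic
    // Let ARSCNView create and update SceneKit lights from ARKit's estimate (default: true).
    sceneView.automaticallyUpdatesLighting = true
}
#endif
```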

How well does the tracking work?

Even without much work, the tracking is very robust, but there are a few cases that cause it to fall apart.

Tracking does not work if something like a plant is moving in front of the target plane, or when all known tracking data is obstructed. ARKit also needs more information about a scene when it lacks distinctive surfaces, such as a cat on a bed.

If set up correctly, people can occlude the anchored object; Apple handles this with a machine learning model. This only works for people, however. Occluding other objects requires additional hardware support.
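A sketch of opting into people occlusion follows; the function name is hypothetical. The `.personSegmentationWithDepth` frame semantic needs iOS 13 and an A12 chip or later, so the code checks support before enabling it. `ARSCNView` and RealityKit's `ARView` composite the resulting mask automatically.

```swift
#if os(iOS)
import ARKit

/// Enables people occlusion when supported. Returns whether it was enabled.
@discardableResult
func enablePeopleOcclusion(on configuration: ARWorldTrackingConfiguration) -> Bool {
    guard ARWorldTrackingConfiguration.supportsFrameSemantics(.personSegmentationWithDepth) else {
        return false  // Unsupported device: content is drawn without people occlusion.
    }
    // Segmentation plus depth lets a person standing in front of the cube hide it.
    configuration.frameSemantics.insert(.personSegmentationWithDepth)
    return true
}
#endif
```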

Moving the device quickly, such as shaking or jolting it from side to side, is fine, especially if the start and end points show a similar image. If you were building a product that relied on this, however, you would need to do more testing. It works fine in limited scope, but at times tracking takes a couple of seconds to work out where the device has moved, so the rendered objects jump to the correct location.

This also works quite well at large distances. With all the sensors combined, you can move far enough away that the rendered object is almost too small to see. Slight errors do add up, but as you return to the object, tracking can correct itself as it recognises the environment.

One area that can cause issues is tracking in a featureless environment like a plain wall. This works quite well by default, because tracking relies on sensors other than just the camera, but it falls apart the further you move without features. Even a skirting board, a corner, a mark on the wall, or lighting changes as you move along the surface is enough to avoid the problem entirely.

In the dark, tracking struggles to find planes and map the environment, because visual mapping is such an important part of the data collection. The other sensors are not strong enough on their own.
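One way to react to a dark scene is to read ARKit's light estimate and prompt the user before tracking degrades. ARKit reports `ambientIntensity` in lumens, where 1000 represents neutral lighting. The 250-lumen cut-off below is a hypothetical value to tune through testing, and the function names are also hypothetical.

```swift
#if os(iOS)
import ARKit
#endif

/// Hypothetical cut-off, well below ARKit's "neutral" value of 1000 lumens.
let lowLightThreshold = 250.0

/// Platform-independent check, kept separate so it can be tested off-device.
func isTooDark(ambientIntensity: Double) -> Bool {
    ambientIntensity < lowLightThreshold
}

#if os(iOS)
/// Reads the light estimate from the latest frame (light estimation is on by default).
func sceneIsTooDark(_ session: ARSession) -> Bool {
    guard let estimate = session.currentFrame?.lightEstimate else { return false }
    return isTooDark(ambientIntensity: Double(estimate.ambientIntensity))
}
#endif
```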

One option could be to use the device's flashlight. However, this would not work well at large scales. It also makes shadows move as the device moves, so it is not ideal for tracking and is not recommended.

LiDAR

LiDAR ("light detection and ranging" or "laser imaging, detection, and ranging") is a method for determining distance by targeting an object or a surface with a laser and measuring the time for the reflected light to return to the receiver.
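The time-of-flight idea in this definition comes down to one line of arithmetic. The light pulse travels to the surface and back, so the distance is half the speed of light multiplied by the round-trip time: d = c · t / 2. A small sketch follows, with hypothetical function names. It uses the speed of light in a vacuum; the difference in air is negligible here.

```swift
/// Speed of light in a vacuum, in metres per second.
let speedOfLight = 299_792_458.0

/// Time-of-flight ranging: the pulse goes out and back,
/// so the one-way distance is half of (speed x round-trip time).
func distance(forRoundTrip seconds: Double) -> Double {
    speedOfLight * seconds / 2
}

/// Inverse: how long a pulse takes to return from a surface `metres` away.
func roundTripTime(toDistance metres: Double) -> Double {
    2 * metres / speedOfLight
}
```

For a surface at the scanner's 5 m range, `roundTripTime(toDistance: 5)` comes out at roughly 33 nanoseconds.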

The LiDAR scanner in Apple devices accurately measures the distance to surrounding objects up to 5 meters away. It has been included in every Pro iPhone and iPad since the 2020 iPad Pro.

LiDAR solves many of the problems mentioned above and is simple to use. Enabling it takes only a small configuration change. It makes full use of the scanner, including more accurate object edge detection and object distance checks for effects like clouds or distance blur.
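A sketch of opting into LiDAR-backed features only when the hardware is present follows. It assumes a world-tracking configuration that hasn't been run yet, and the function names are hypothetical. Mesh reconstruction is only supported on LiDAR devices, so the support checks double as a LiDAR check. The occlusion step uses RealityKit's `ARView`, which can use the reconstructed mesh to hide virtual content behind real objects.

```swift
#if os(iOS)
import ARKit
import RealityKit

/// Opts into LiDAR-backed features when the device supports them.
func enableLiDARFeatures(on configuration: ARWorldTrackingConfiguration) {
    // Mesh reconstruction of the surroundings (iOS 13.4+, LiDAR devices only).
    if ARWorldTrackingConfiguration.supportsSceneReconstruction(.mesh) {
        configuration.sceneReconstruction = .mesh
    }
    // Per-pixel depth map from the LiDAR scanner (iOS 14+).
    if ARWorldTrackingConfiguration.supportsFrameSemantics(.sceneDepth) {
        configuration.frameSemantics.insert(.sceneDepth)
    }
}

/// With RealityKit, the reconstructed mesh can occlude virtual content.
/// Requires the session to be running with `sceneReconstruction = .mesh`.
func enableMeshOcclusion(in arView: ARView) {
    arView.environment.sceneUnderstanding.options.insert(.occlusion)
}
#endif
```

On devices without LiDAR both checks fail and the configuration is left unchanged, which gives the "lite" fallback for free.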

This leads to difficult product decisions. Many enthusiasts, and devices bought specifically as AR clients, will probably have LiDAR. It is therefore worth supporting devices with a high-end GPU and LiDAR, while defaulting to “lite” functionality when these are not present. The question then becomes how important LiDAR-driven functionality is to a particular use case.

Identifying tracking issues

AR works fine with most movement, including changes of elevation, as long as there is enough tracking data. You can look under an object, turn around it, look away and look back and hold the phone upside down without issue. But what if there isn’t enough tracking data?

Thankfully, we don’t need to measure this ourselves. Instead, we can use the `ARSCNViewDelegate` callbacks mentioned earlier to check the session quality. Our session has a `currentFrame` property, which has a `camera`, which in turn has a `trackingState`.

1guardlet frame = session.currentFrame else{return}2updateSessionInfoLabel(for: frame, trackingState: frame.camera.trackingState)The tracking state tells us quite a bit about ARKits confidence in the tracking data and is classified as either

notAvailablenormallimited(TrackingState.Reason)Using the

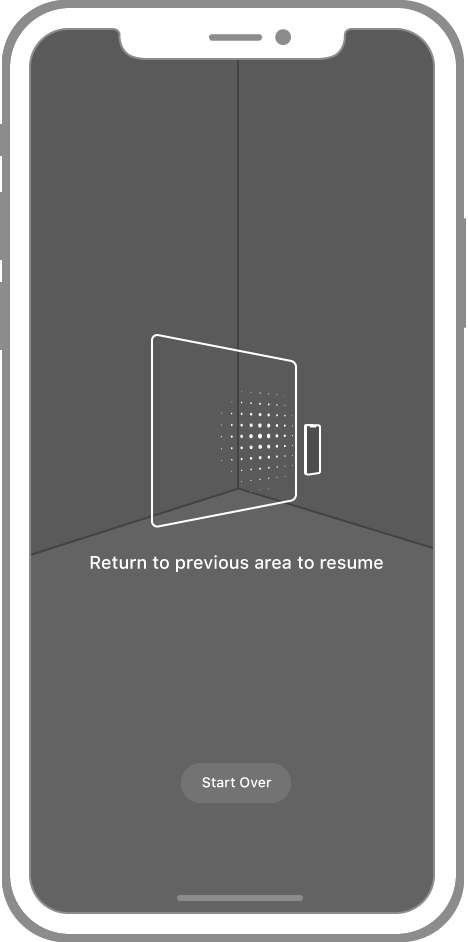

ReasonTrackingState.Reason.initalizingTrackingState.Reason.relocalizingTrackingState.Reason.excessiveMotionTrackingState.Reason.insufficientFeaturesResolving tracking issues with UX

Depending on the quality of experience we want to create, we may wish to use this information to end the experience. We can also use it to prompt the user to do something that may improve the tracking. The AR session resumes normal operation once tracking issues are resolved.
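A sketch of turning tracking states into user prompts follows. `TrackingIssue`, `guidance(for:)`, and `TrackingPromptPresenter` are hypothetical names, and the message wording is illustrative. The mapping from issues to messages is kept platform-independent so it can be tested off-device. The ARKit glue uses the `session(_:cameraDidChangeTrackingState:)` callback, which fires whenever the tracking state changes.

```swift
#if os(iOS)
import ARKit
#endif

/// Hypothetical app-level mirror of ARKit's limited-tracking reasons.
enum TrackingIssue {
    case initializing, relocalizing, excessiveMotion, insufficientFeatures
}

/// Illustrative user-facing prompt for each issue.
func guidance(for issue: TrackingIssue) -> String {
    switch issue {
    case .initializing: return "Move your device slowly to scan the area."
    case .relocalizing: return "Point your device back at where you were before."
    case .excessiveMotion: return "Try moving your device more slowly."
    case .insufficientFeatures: return "Aim at a surface with more detail, or add more light."
    }
}

#if os(iOS)
extension TrackingIssue {
    /// Maps ARKit's state onto our enum; nil means there is nothing to prompt about.
    init?(_ state: ARCamera.TrackingState) {
        switch state {
        case .limited(.initializing): self = .initializing
        case .limited(.relocalizing): self = .relocalizing
        case .limited(.excessiveMotion): self = .excessiveMotion
        case .limited(.insufficientFeatures): self = .insufficientFeatures
        case .normal, .notAvailable, .limited: return nil  // Also covers future reasons.
        }
    }
}

/// Session delegate that turns tracking-state changes into prompts.
final class TrackingPromptPresenter: NSObject, ARSessionDelegate {
    /// Hypothetical UI hook, e.g. updating a label; nil hides the prompt.
    var showPrompt: (String?) -> Void = { _ in }

    func session(_ session: ARSession, cameraDidChangeTrackingState camera: ARCamera) {
        showPrompt(TrackingIssue(camera.trackingState).map(guidance(for:)))
    }
}
#endif
```

There are two ways to wire this up:
- Keep a strong reference to the presenter and assign it to `sceneView.session.delegate`, since that delegate property is weak.
- Move the method into an existing `ARSCNViewDelegate`, which inherits the same callback from `ARSessionObserver`.

Apple also provides `ARCoachingOverlayView` (iOS 13+), a built-in overlay that guides users through common tracking problems.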
